Spectra Logic has created the BlackPearl Certification Program to ensure that the BlackPearl clients created by our partners are robust and reliable. We have provided detailed information on how to get certified. All partners should strive to have their BlackPearl clients certified. We are now certifying our first clients and will be announcing them soon. If you are interested in having your client certified, please Contact Us.

Change to Package Structure in Java 3.x RC SDK

The Java 3.0 and 3.2 Release Candidate (RC) SDKs are available, and some partners and customers are now trying them out. Yesterday we changed the package structure in these SDKs to make it clear which classes are auto-generated and which are not: the classes that are not auto-generated have moved to different packages. You can view the details in this GitHub pull request. Most users probably aren’t using these classes yet, but those who are will need to update their code to use the new package names. Contact us on the forums if you have any questions or concerns.

Developer Program Team at NAB 2016

The biggest trade show in the Media and Entertainment arena, NAB 2016, is coming next month to Las Vegas, and Spectra Logic will be there in full force. The Spectra Logic Developer Program team will also be on site to answer your questions and discuss your BlackPearl client integration plans. Included in our team will be Ryan Moore, BlackPearl SDK Engineering Manager, and yours truly, BlackPearl Developer Evangelist Jeff Braunstein. Come visit us at booth SL11816 and tell us about your plans to integrate with BlackPearl.

New Article About Exceptions and Unexpected Conditions

Any software application has to deal with errors, exceptions, and unexpected conditions. BlackPearl client developers must consider what unexpected conditions and exceptions may occur in their clients and have the client respond to them appropriately. We have created a new article that reviews the most common unexpected conditions in a BlackPearl client and how to respond to them appropriately. We recommend all developers read it and ensure that their clients follow these guidelines.

BlackPearl 3.0 Now Shipping; Updated SDK, Simulator, Documentation

We are happy to announce that Version 3.0 of BlackPearl has been released! It is now shipping on new BlackPearl hardware, and it is available to existing BlackPearl customers. Existing BlackPearl customers should work with Spectra Logic Support to ensure that the upgrade goes smoothly.

We explained the main new features of BlackPearl in two recent blog posts:

- BlackPearl 3.0 New Features Part 1: ArcticBlue and Advanced Bucket Management

- BlackPearl 3.0 New Features Part 2: Access Control Lists

To assist developers with building BlackPearl 3.0 clients, we have released the following new tools and resources:

- 3.0 Simulator -- simulates a working BlackPearl for easy development

- 3.0 API Reference -- review the operations supported by BlackPearl’s API

- 3.0 Java SDK -- Use the new SDK to take advantage of all the new BlackPearl features

- 3.0 Java SDK Javadocs -- Learn about all the SDK classes, methods, properties, and events

Over the coming weeks we will be releasing 3.0 versions of our SDKs in our remaining languages -- .NET/C#, C, and Python. Note that all of the existing 1.x SDKs work fine with BlackPearl 3.0; they just don’t yet have access to the new 3.0 features.

We will be releasing more resources and information over time to help you more easily build BlackPearl 3.0 clients.

BlackPearl Object Naming

As with most cloud storage systems, when a file is uploaded to BlackPearl we call it an “object”. There are some constraints around how objects are named in BlackPearl due to it often being connected to a tape system that stores data using the open LTFS format.

When you configure BlackPearl, you set up Storage Domain(s), which are collections of tape and/or disk partitions. If the Storage Domain includes tape partition(s), you must specify the “LTFS File Name” option for the Storage Domain. This option specifies how the file is named when it is placed on tape. There are two options for the LTFS File Name:

- Object Name -- LTFS file names use the format {bucket name}/{object name}, for example bucket1/video1.mov. Object names must comply with the file naming rules of the LTFS 2.4 specification. The file name must be 255 characters or fewer. If the tapes are ejected from the BlackPearl gateway and loaded into a non-BlackPearl tape partition, the file names match the object names. The colon character (:) is not allowed in LTFS file names and is therefore not allowed in BlackPearl object names. The slash character (/) is also technically not allowed in LTFS file names; however, in BlackPearl a slash is allowed in the object name and is translated into a directory in the LTFS file system (e.g. directory1/directory2/video1.mov). The following characters are not recommended in LTFS file names or BlackPearl object names for reasons of cross-platform compatibility: control characters such as carriage return (CR) and line feed (LF), double quotation mark ("), asterisk (*), question mark (?), less than sign (<), greater than sign (>), backslash (\), vertical line (|). Note that per the LTFS specification, “Implementations which claim compliance with version 2.4.0 or later of this specification shall support the percent-encoding of names … in order to avoid issues with the characters listed [above].”

- Object ID -- LTFS file names use the format {bucket name}/{object id}, for example bucket1/1fc6f09c-dd72-41ea-8043-0491ab8a6d82. Object names do not need to comply with LTFS file naming rules. The object names are saved as LTFS extended attributes, allowing any third-party application to reconstruct all the data, including the object names.

When building a BlackPearl client, developers should be prepared for customers to choose either LTFS File Name option, and thus should ensure that object names are LTFS compliant as described in the first option above.
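As a rough illustration of the naming rules above, a client could screen object names before uploading. The sketch below is not part of any Spectra SDK: the function name `check_object_name` is hypothetical, and applying the 255-character limit to each slash-separated path component (rather than to the whole name) is an assumption.

```python
import unicodedata

FORBIDDEN = {":"}                    # not allowed in LTFS file names
DISCOURAGED = set('"*?<>\\|')        # not recommended for cross-platform compatibility
MAX_COMPONENT_LENGTH = 255           # assumption: limit applies per path component


def check_object_name(name):
    """Return (errors, warnings) for a proposed BlackPearl object name."""
    errors, warnings = [], []
    for ch in sorted(FORBIDDEN & set(name)):
        errors.append(f"character {ch!r} is not allowed")
    # A slash is allowed and becomes a directory in the LTFS file system,
    # so the length check is applied to each component separately.
    if any(len(part) > MAX_COMPONENT_LENGTH for part in name.split("/")):
        errors.append(f"path component longer than {MAX_COMPONENT_LENGTH} characters")
    for ch in sorted(DISCOURAGED & set(name)):
        warnings.append(f"character {ch!r} is not recommended")
    # Unicode category "Cc" covers control characters such as CR and LF.
    if any(unicodedata.category(ch) == "Cc" for ch in name):
        warnings.append("control characters are not recommended")
    return errors, warnings
```

A client might reject names that produce errors and either rename or percent-encode names that only produce warnings.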

Java CLI Updates – Symbolic Link Support, Performance Test

We have released an update to the Java CLI BlackPearl client. You can download this latest release (1.2.4) from the Java CLI Releases page on GitHub. There are several updates in the new release, including two major new features detailed below.

Symbolic Link Support

Support was added for Symbolic Links in Unix/Linux. This is relevant in the case where the put_bulk command is used to move a directory of files to BlackPearl. If the Java CLI sees that the directory contains a symbolic link, it will attempt to also include the file to which the symbolic link points (even if it is not in that directory). Here are a few important notes regarding symbolic link support:

- The user account under which the Java CLI is being run must have access to the file to which the symbolic link is pointing.

- If there are several symbolic links pointing to the same file, but from different folder paths, BlackPearl will include a copy of the file for each symbolic link because each “file” is in a different folder path.

- When the CLI restores the file from BlackPearl, it will restore the actual files, not the symbolic links. This is because BlackPearl is not aware that it is a symbolic link.
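The behavior described in these notes can be pictured with a small directory walk. This is only a sketch of the idea, not the Java CLI's actual implementation; `collect_files` is a hypothetical helper, and it assumes symbolic links to directories are not followed.

```python
import os


def collect_files(root):
    """Map each object name under root to the real file whose data would be sent."""
    plan = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for filename in filenames:
            path = os.path.join(dirpath, filename)
            # Resolve a symbolic link to the file it points to, even if that
            # file lives outside of root.
            target = os.path.realpath(path)
            if os.path.isfile(target):  # skips broken links
                name = os.path.relpath(path, root).replace(os.sep, "/")
                plan[name] = target
    return plan
```

Because the mapping is keyed by the link's own path, two links in different folders that point to the same file yield two entries, which matches the "one copy per symbolic link" note above.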

Performance Test

We added a new performance command to the CLI. We hope this new performance test will make it easier to troubleshoot BlackPearl performance. This command tests network performance as well as the performance to the BlackPearl cache. This does not test the back-end performance of the BlackPearl (speed between BlackPearl and tape library or permanent disk target).

Users can specify the number and size of files in the test. The files are automatically generated on the fly by the CLI and not written to disk (this eliminates the disk read/write speed from the test). When the command is executed, the CLI will send these files to BlackPearl in a PUT operation. Once the PUT operation is complete, the CLI will then issue a GET operation to retrieve those same files from BlackPearl.
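To make the idea of generating test data on the fly more concrete, here is a minimal sketch. It is not the CLI's code: `generate_payload` and `measure_throughput` are hypothetical names, the data is zero-filled for simplicity, and the `consume` callback stands in for whatever actually sends the bytes over the network.

```python
import time


def generate_payload(total_bytes, block_size=1024 * 1024):
    """Yield zero-filled blocks totalling total_bytes without touching disk."""
    block = bytes(block_size)
    remaining = total_bytes
    while remaining > 0:
        size = min(block_size, remaining)
        yield block[:size]
        remaining -= size


def measure_throughput(total_bytes, consume):
    """Feed generated data to consume() and return megabytes per second."""
    start = time.perf_counter()
    for block in generate_payload(total_bytes):
        consume(block)
    elapsed = time.perf_counter() - start
    megabytes = total_bytes / 1_000_000
    return megabytes / elapsed if elapsed > 0 else float("inf")
```

Since the data never comes from local storage, the measured rate reflects only the consumer (in the real test, the network and the BlackPearl cache).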

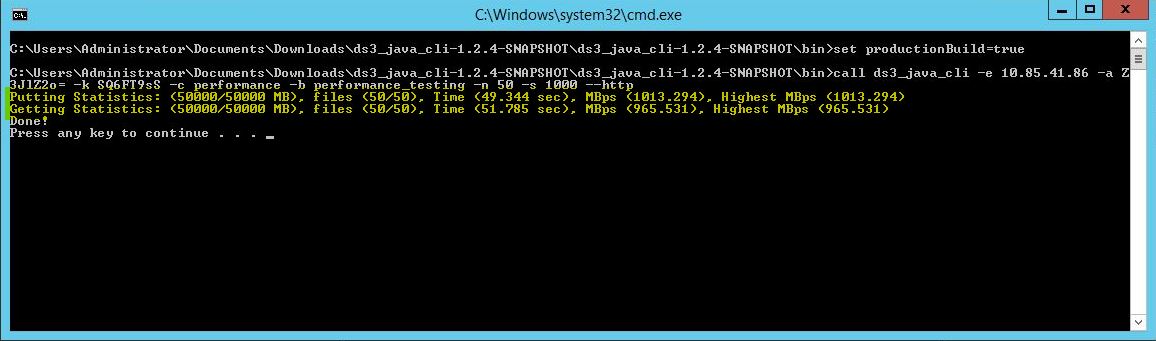

Here is an example of the performance command being run. In this case there were 50 files, each 1000MB, using a bucket called “performance_testing”:

ds3_java_cli -e 10.85.41.86 -a Z3JlZ2o= -k 4QJFANLi -c performance -b performance_testing -n 50 -s 1000 --http

Here is the output from the CLI (results highlighted in yellow):

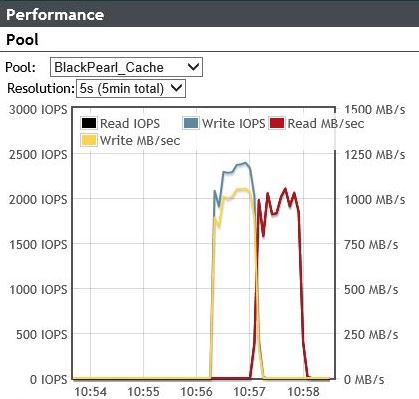

Here is the corresponding performance graph from the BlackPearl web management interface.

We hope these new features will make it easier to use BlackPearl. Please give us your feedback or ask questions on our Forums.

BlackPearl “Chunks” and “Blobs”

BlackPearl uses the Spectra S3 protocol to manage files. We receive many questions on how files interact with BlackPearl using this protocol, and particularly how files are broken up for moving them to and from BlackPearl. Hopefully this post will help clarify the topic.

Jobs, Chunks and Blobs

A job is a management container for file input/output operations with BlackPearl. A job must target a single bucket. So, for example, a client might start a job to send 20 files to a bucket in BlackPearl.

A chunk is a unit into which jobs are broken by BlackPearl. A chunk consists of one or more blobs (see below). The default chunk size is dynamic based on the storage target.

- If the storage target is tape, then the chunk size will be 2% of the uncompressed tape capacity. For example, an LTO-7 tape’s uncompressed capacity is 6 trillion bytes, so the chunk size for LTO-7 would be 2% of this: 120 billion bytes, or approximately 112GB when counted in binary gigabytes (1GB = 2^30 bytes).

- If the storage target is disk (e.g. ArcticBlue or Online Disk), then the preferred blob size (64GB, see below) is used as the chunk size.

If the total job size is less than or equal to the chunk size, there will only be one chunk for the job. Note that the BlackPearl cache is managed by allocating space for each chunk on a PUT or GET request.
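The chunk size rules above reduce to a little arithmetic, sketched below. The function names are hypothetical, and treating "64GB" as 64 binary gigabytes (GiB) is an assumption.

```python
GIB = 2**30
PREFERRED_BLOB_SIZE = 64 * GIB  # assumption: 64GB is measured in binary gigabytes


def default_chunk_size(target, tape_capacity_bytes=None):
    """Return the default chunk size in bytes for a 'tape' or 'disk' target."""
    if target == "tape":
        if tape_capacity_bytes is None:
            raise ValueError("tape targets need the uncompressed tape capacity")
        return tape_capacity_bytes * 2 // 100  # 2% of uncompressed capacity
    if target == "disk":
        return PREFERRED_BLOB_SIZE
    raise ValueError(f"unknown storage target: {target!r}")


def chunk_count(job_size_bytes, chunk_size_bytes):
    """Number of chunks for a job; a job never has fewer than one chunk."""
    return max(1, -(-job_size_bytes // chunk_size_bytes))  # ceiling division


lto7_chunk = default_chunk_size("tape", 6_000_000_000_000)
print(lto7_chunk, round(lto7_chunk / GIB))
```

For LTO-7 this gives 120,000,000,000 bytes, which rounds to 112 when expressed in binary gigabytes.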

A blob is a file or file part sent to or received from BlackPearl in one PUT or GET operation. A blob will never consist of more than one file. One or more blobs will make up a chunk. The preferred blob size is 64GB, but this size can vary to match the file size or fit in a chunk, and the size can also be changed by the client.

Example -- Let’s assume you have a 500GB job to upload to BlackPearl consisting of seven files (one 250GB file, one 125GB file, and five 25GB files). The storage target is a tape library using LTO-7 tapes. Let’s also assume the chunk size is 112GB and the blob size is 64GB. The job will be broken up into five chunks that look like this:

- Chunk 1 -- Size: 112GB
  - Blob 1 -- Part 1 of 250GB file -- Size: 64GB
  - Blob 2 -- Part 2 of 250GB file -- Size: 48GB

- Chunk 2 -- Size: 112GB
  - Blob 1 -- Part 3 of 250GB file -- Size: 64GB
  - Blob 2 -- Part 4 of 250GB file -- Size: 48GB

- Chunk 3 -- Size: 112GB
  - Blob 1 -- Part 5 of 250GB file -- Size: 26GB
  - Blob 2 -- Part 1 of 125GB file -- Size: 86GB

- Chunk 4 -- Size: 112GB
  - Blob 1 -- Part 2 of 125GB file -- Size: 39GB
  - Blob 2 -- 25GB file -- Size: 25GB
  - Blob 3 -- 25GB file -- Size: 25GB
  - Blob 4 -- Part 1 of 25GB file -- Size: 23GB

- Chunk 5 -- Size: 52GB
  - Blob 1 -- Part 2 of 25GB file -- Size: 2GB
  - Blob 2 -- 25GB file -- Size: 25GB
  - Blob 3 -- 25GB file -- Size: 25GB
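The layout above can be approximated with a simple greedy model. The sketch below is a simplification for illustration only, not BlackPearl's actual planning logic, and `plan_job` is a hypothetical name. It strictly caps every blob at the preferred blob size, so it reproduces the five chunk sizes in the example but splits the 125GB file differently in Chunk 3 (64GB + 22GB instead of one 86GB blob).

```python
def plan_job(file_sizes, chunk_size, blob_size):
    """Greedily pack files into chunks.

    Returns a list of chunks, each a list of (file_index, blob_length) pairs.
    A blob never spans two files and never crosses a chunk boundary.
    """
    chunks = []
    room = 0  # space left in the current chunk
    for index, remaining in enumerate(file_sizes):
        while remaining > 0:
            if room == 0:
                chunks.append([])
                room = chunk_size
            length = min(remaining, blob_size, room)
            chunks[-1].append((index, length))
            remaining -= length
            room -= length
    return chunks


# Sizes in GB, matching the example: one 250GB, one 125GB, five 25GB files.
plan = plan_job([250, 125, 25, 25, 25, 25, 25], chunk_size=112, blob_size=64)
for number, chunk in enumerate(plan, start=1):
    print(f"Chunk {number}: {sum(length for _, length in chunk)}GB", chunk)
```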

How the SDK Helper Functions Work with Chunks and Blobs

The Java and .NET/C# SDKs include “Helper” functions that make it easier to move files to and from BlackPearl. These Helper functions provide a layer of abstraction over the concept of BlackPearl chunks and blobs. The client code does not need to know how to manage chunks and blobs, which greatly reduces the effort to integrate a client with BlackPearl. We therefore recommend that client developers use the Helper functions whenever possible.

These Helper functions can transfer blobs concurrently on parallel threads. The number of threads can be set by the client. For example, the Java CLI, which uses the Java SDK and its Helper functions, is set to use 10 parallel threads. Note that there are some limitations on the ability to transfer, in parallel, blobs that belong to the same file. An individual mounted file on a file system path can have multiple blobs sent to BlackPearl in parallel, but an individual file received via a file stream/channel cannot.
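Conceptually, this kind of parallel blob transfer resembles a bounded thread pool. The sketch below only illustrates that pattern and does not use the SDK Helper API; `transfer_blobs` and the `send_blob` callback are hypothetical stand-ins for whatever actually moves a blob.

```python
from concurrent.futures import ThreadPoolExecutor


def transfer_blobs(blobs, send_blob, max_threads=10):
    """Call send_blob(blob) for every blob on up to max_threads worker threads.

    Results are returned in the same order as the input blobs.
    """
    with ThreadPoolExecutor(max_workers=max_threads) as pool:
        return list(pool.map(send_blob, blobs))
```

A stream-backed file would not fit this pattern directly, because its blobs can only be read in order from a single source.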

Blobbing and Checksums

BlackPearl performs a checksum on all files sent to it. If a file must be broken up into multiple blobs, it will perform a checksum on each individual blob. It will not perform a checksum across the entire file if the file is broken into multiple blobs. If a client application is tracking checksums for files and comparing them to BlackPearl’s recorded value for verification, it must be aware that it should compare the checksum of the blobs rather than the checksum of the entire file. A client could store the checksum of the entire file in the metadata of one of the blobs that it uploads to BlackPearl. However, BlackPearl would not use this checksum value itself. Read More About BlackPearl and Checksums
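The difference between per-blob and whole-file checksums can be seen in a few lines. This is a sketch only: the helper names are hypothetical, SHA-256 is chosen purely for illustration (the checksum type actually in use depends on the BlackPearl configuration), and the data is held in memory for brevity.

```python
import hashlib


def blob_checksums(data, blob_size, algorithm="sha256"):
    """Return one hex digest per blob-sized slice of data."""
    return [
        hashlib.new(algorithm, data[offset:offset + blob_size]).hexdigest()
        for offset in range(0, len(data), blob_size)
    ]


def whole_file_checksum(data, algorithm="sha256"):
    """Return the hex digest of the entire file contents."""
    return hashlib.new(algorithm, data).hexdigest()
```

For a file split into several blobs, none of the per-blob digests equals the whole-file digest, so a client that verifies against BlackPearl's recorded values needs to keep the per-blob digests.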

BlackPearl Performance, File Size, and Job Size

BlackPearl uses Bulk PUT and GET commands to move files to and from its storage targets -- tape and disk. We call them “Bulk” commands because they transfer multiple files in one “job”. The bulk commands provide several advantages over traditional, single-file S3 PUT and GET operations, including: 1) ensuring that the BlackPearl cache is ready to receive the files; and 2) providing adequate data to continuously write or read data on to the storage target at a high level of performance.

Many factors affect the file transfer performance to and from BlackPearl, including network configuration and equipment specifications, transfer rate capabilities from the primary storage medium, file transfer software architecture, and file sizes/job size. To maximize file transfer performance (typically measured in megabytes per second) on a bulk PUT or GET job, the size of both the individual files in the job and the total job must be considered. Spectra Logic has performed considerable testing and analysis of various file sizes and has determined ideal file sizes for maximizing performance in bulk PUT and GET jobs. The results of this testing are below.

We recommend developers build BlackPearl clients that target at least the “Good” numbers below. Based on this recommendation, developers will want individual file sizes in the tens of megabytes and total job sizes in the hundreds of gigabytes. Sizes smaller than these can result in significant performance degradation.

For more tips on building a BlackPearl client, see our Guidance and Tips page.

Individual Objects/Files in a Bulk PUT or GET Job

| Performance | File Size |
| --- | --- |
| Poor | >= 2 MB |
| Fair | >= 5 MB |
| Good | >= 50 MB |
| Great | >= 1 GB |

Total Bulk Job Size

| Performance | LTO-6 Tape Drives | LTO-7 or TS1150 Tape Drives |
| --- | --- | --- |
| Poor | >= 10 GB | >= 20 GB |
| Fair | >= 50 GB | >= 100 GB |
| Good | >= 200 GB | >= 400 GB |
| Great | >= 1 TB | >= 2 TB |

Performance descriptions:

Poor — Performance will have degraded by no more than one order of magnitude

Fair — Performance will have degraded by around half

Good — Performance will have degraded by around 10%

Great — Maximum/optimal performance
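A client could use the thresholds from the two tables above to warn users before they submit an undersized job. The sketch below is illustrative: the names are hypothetical, the units are assumed to be decimal (1 MB = 1,000,000 bytes), and sizes below the "Poor" threshold, which the tables do not rate, return `None`.

```python
MB = 1_000_000  # assumption: decimal units
GB = 1_000 * MB
TB = 1_000 * GB

# Thresholds are listed from best to worst so the first match wins.
FILE_THRESHOLDS = [("Great", 1 * GB), ("Good", 50 * MB), ("Fair", 5 * MB), ("Poor", 2 * MB)]
JOB_THRESHOLDS = {
    "LTO-6": [("Great", 1 * TB), ("Good", 200 * GB), ("Fair", 50 * GB), ("Poor", 10 * GB)],
    "LTO-7": [("Great", 2 * TB), ("Good", 400 * GB), ("Fair", 100 * GB), ("Poor", 20 * GB)],
}
JOB_THRESHOLDS["TS1150"] = JOB_THRESHOLDS["LTO-7"]  # same column in the table


def rate(size_bytes, thresholds):
    """Return the performance label for a size, or None if below every threshold."""
    for label, minimum in thresholds:
        if size_bytes >= minimum:
            return label
    return None


print(rate(60 * MB, FILE_THRESHOLDS))           # file size rating
print(rate(300 * GB, JOB_THRESHOLDS["LTO-7"]))  # job size rating on LTO-7
```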

Preparing for the BlackPearl 3.0 Release

As mentioned in our last two blog posts (see Part 1 and Part 2), BlackPearl 3.0 will be released soon and will include some exciting new features. Shortly after BlackPearl 3.0 is released, we will also be releasing associated updates to our Software Development Kits (SDKs), which are available in Java, C#/.NET, C, and Python. BlackPearl client developers should understand how to prepare for this new release.

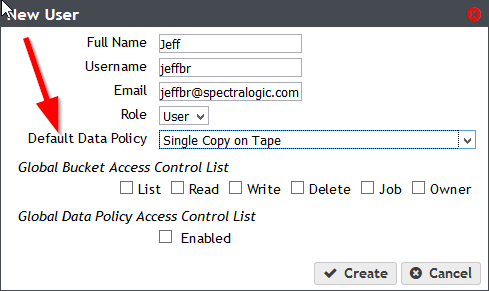

For the most part, BlackPearl clients using the existing 1.x SDKs should function normally with BlackPearl 3.0. The one exception to this is the 1.x Java SDK, which will require a small patch in order to work with BlackPearl 3.0. We will be releasing this patch, which will be Java SDK Release 1.2.1, soon and will announce it on this Blog once it becomes available. Also, if your client programmatically creates buckets, you should ensure that the user account the client uses to create the bucket has a default data policy (see screen image below).

When BlackPearl 3.0 is released, we will also be releasing a new version of the BlackPearl Simulator so that developers can test their client code against the new release before upgrading any actual BlackPearl systems. We are recommending this because there have been significant changes and additions to the 3.0 Application Program Interface (API) and, while it is our goal to be fully backward compatible with 1.x clients, client integration testing to ensure your client’s full compatibility with BlackPearl 3.0 would be wise.

The SDKs provide a layer of abstraction over the HTTP-based, RESTful API commands of the BlackPearl. The current 1.x SDKs provide access to only a subset of the most popular BlackPearl API commands. This is because each API command had to be manually programmed in the SDK by our Engineering Team, and it was too time-intensive to write SDK commands for all API commands. So while the 1.x SDKs have commands for common actions such as moving files to and from BlackPearl and creating buckets, they do not have commands for less common actions such as inspecting and ejecting tapes.

With our 3.0 SDKs, we developed a technique to automatically generate most of the SDK code needed for each associated API command. This means that almost every BlackPearl API command will have an associated command in each of the SDKs. So developers using the SDKs will now be able to access nearly all API commands via the SDKs, including new features such as Advanced Bucket Management and Access Control Lists. We will also continue to make the SDKs easier to use as we have already done, such as adding the “helper” functions (currently available in the Java and .NET SDKs) that further simplify the archive and restore process.

There are three areas of change that developers should be aware of when upgrading their client from 1.x to 3.0:

- Due to the nature of the 3.0 SDKs, and the fact that they support nearly all BlackPearl API commands, we had to restructure the SDK code base compared to the 1.x SDKs. The BlackPearl API supports Standard S3 as well as Spectra S3 commands, and in some cases there are separate commands for each protocol (Standard S3 versus Spectra S3) that do essentially the same thing. So for example, there is a Standard S3 command to create a bucket and a separate Spectra S3 command to create a bucket, each with different input parameters. In order to make both of these commands available in the SDKs, we had to create separate namespaces for each protocol. Therefore, if you are updating your code from 1.x to 3.0, you will likely have to append the appropriate namespace in your code to each command. We will provide specific examples once the 3.0 SDKs are released.

- Some method names will have to be changed between 1.x and 3.0. We will provide a full list of those name changes.

- There will be a few methods where the ordering of arguments will have to be changed. We will provide a list of those methods.

The new 3.0 SDKs will also include a new set of documentation and code examples.

Developers should make sure that they are planning for the BlackPearl 3.0 release. Those upgrading from 1.x to 3.0 and who are not changing client functionality should expect it to be straightforward. For those wanting to enhance their clients to take advantage of new 3.0 features, our Developer website will be available for help. Look for more information on this Blog once BlackPearl 3.0 is released.