Spectra BlackPearl includes an Application Programming Interface (API) that allows applications to interact with the BlackPearl system. Spectra Logic provides Software Development Kits (SDKs) for several programming languages, including Python 3. Each SDK wraps the API so that it is easier to call from the selected programming language.

Spectra Logic provides documentation for the Python 3 SDK that explains how to use its commands. Because this documentation is generated automatically from the code, it is helpful but can be difficult to interpret. The example below shows how to use it.

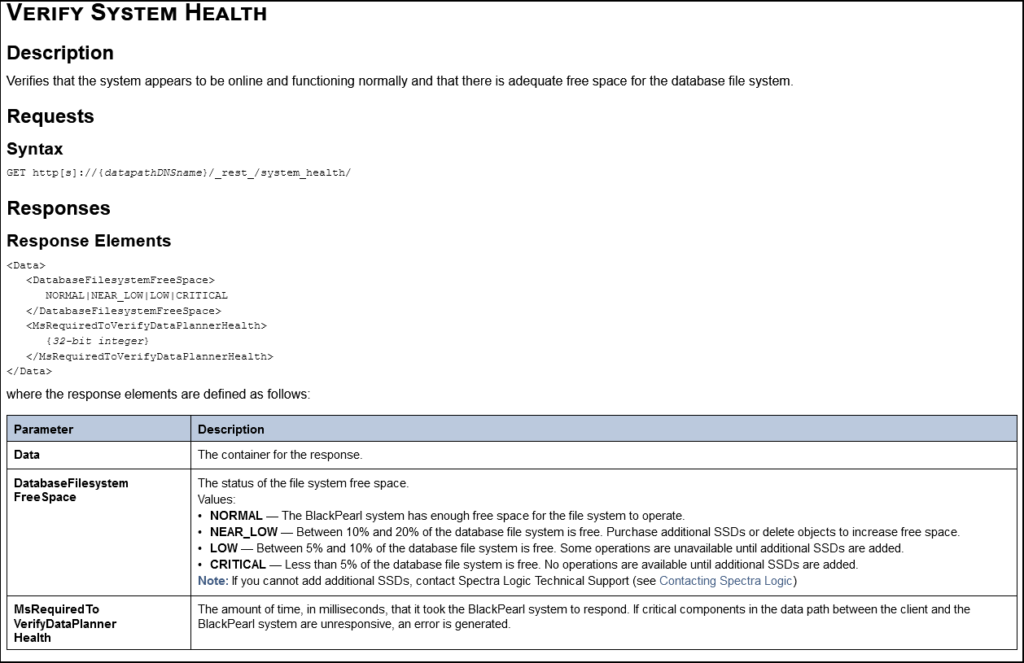

Start with the API documentation: review it and determine which command you wish to use. For example, suppose you want to check the health of the BlackPearl system. This is done with the Verify System Health API command. The API documentation page for this command includes the command syntax and the request and response parameters, as shown below.

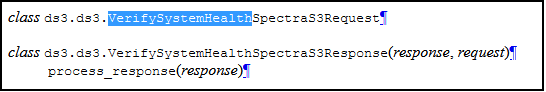

Next, find the corresponding Python components for this command in the Python documentation. Because the command is titled “Verify System Health” in the API documentation, search the Python documentation page for “VerifySystemHealth” (no spaces). In most browsers you can press Ctrl+F or Command+F to search a web page for text. The search should take you to the part of the page that lists the Python classes associated with this command, as shown below.

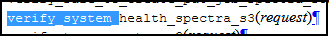

Next, search the Python documentation page again for the associated function call. This time, search for “verify_system_health” (all lower case, with spaces replaced by underscores). The search shows the function name for this API command, which begins with the search term, as shown below:

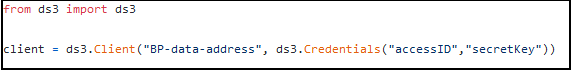
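The two search terms used above follow a mechanical pattern, which can be sketched in Python. The helper below is hypothetical (the name `search_terms` is not part of the SDK) and only reproduces the convention described in this article. The full SDK names carry extra text around these terms, as the function and class names later in this example show, so treat the output as search terms rather than complete names.

```python
def search_terms(api_title):
    """Turn an API command title into the two terms to search for.

    Hypothetical helper for illustration; not part of the ds3 SDK.
    """
    words = api_title.split()
    # Class names: join the words with no spaces.
    class_term = "".join(word[:1].upper() + word[1:] for word in words)
    # Function names: all lower case, with underscores instead of spaces.
    function_term = "_".join(word.lower() for word in words)
    return class_term, function_term


class_term, function_term = search_terms("Verify System Health")
print(class_term)     # VerifySystemHealth
print(function_term)  # verify_system_health
```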

Now we will create the Python code to call the Verify System Health command. First, import the SDK library and create a “client” object for the BlackPearl system you are calling.

You will need to provide the actual credentials for your BlackPearl system.

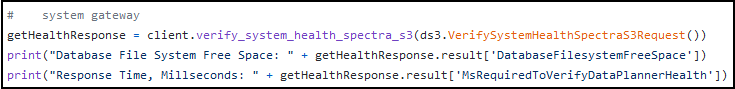
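A minimal sketch of this setup step follows. It assumes the SDK is installed and imported with `from ds3 import ds3`, and that a client is built from `ds3.Client` and `ds3.Credentials`; confirm both against the Python documentation for your SDK version. The function name `create_client` is hypothetical, and the endpoint and key values in the usage comment are placeholders, not real settings.

```python
def create_client(endpoint, access_key, secret_key):
    """Return a client object for one BlackPearl system.

    Assumption: the SDK exposes ds3.Client and ds3.Credentials.
    The import sits inside the function so that the SDK is only
    needed when a client is actually created.
    """
    from ds3 import ds3  # the SDK code library

    credentials = ds3.Credentials(access_key, secret_key)
    return ds3.Client(endpoint, credentials)


# Usage, with placeholder values that you must replace with your own:
# client = create_client("http://blackpearl.example.com",
#                        "your-access-key", "your-secret-key")
```

Keeping the credentials as function arguments, rather than writing them into the call itself, makes it easier to load them from a protected location instead of storing them in the script.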

Next, we will create the Python code for the Verify System Health call.
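Based on the names found in the steps above, the call might look like the following sketch. It assumes that `client` was created as described earlier and that the parsed response parameters are available as a dictionary on the response's `result` attribute. That attribute is an assumption here, so confirm it against the Python documentation. The helper name `get_system_health` is hypothetical.

```python
def get_system_health(client):
    """Call Verify System Health and return two of its response values.

    Assumption: the response object exposes the parsed response
    parameters as a dictionary named result.
    """
    from ds3 import ds3  # the SDK code library

    # Function name and request class as found on the Python documentation page.
    getHealthResponse = client.verify_system_health_spectra_s3(
        ds3.VerifySystemHealthSpectraS3Request())

    # Response parameters as listed on the API documentation page.
    result = getHealthResponse.result
    return {
        "DatabaseFilesystemFreeSpace": result["DatabaseFilesystemFreeSpace"],
        "MsRequiredToVerifyDataPlannerHealth":
            result["MsRequiredToVerifyDataPlannerHealth"],
    }


# Usage, given a client object for your BlackPearl system:
# health = get_system_health(client)
# print(health["DatabaseFilesystemFreeSpace"])
```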

We can review the various components of the code:

- getHealthResponse – this is an arbitrary variable name that you choose

- client – this is the client variable from above when we created the client object

- verify_system_health_spectra_s3 – this is the function name we found on the Python documentation page

- ds3.VerifySystemHealthSpectraS3Request() – ds3 is the SDK code library; VerifySystemHealthSpectraS3Request is the class name we found on the Python documentation page

- DatabaseFilesystemFreeSpace – this is one of the response parameters we found on the API documentation page

- MsRequiredToVerifyDataPlannerHealth – this is another response parameter we found on the API documentation page

Hopefully this example will help you use the documentation to create Python scripts and applications for BlackPearl.