In Part 1 of my BlackPearl 3.0 blog post, I discussed the new storage mediums and data policies that can be used with BlackPearl. Today I focus on another new feature of BlackPearl 3.0, Access Control Lists (ACLs). As the name implies, with ACLs you will be able to control what type of access users and applications have to the data in BlackPearl.

The concept of ACLs is not new. Amazon has been using ACLs with its public cloud storage for some time. BlackPearl’s ACL features are very similar, but not identical, to Amazon’s ACLs. These differences are due to the nature of a private cloud (BlackPearl) versus a public cloud system.

ACLs and Buckets

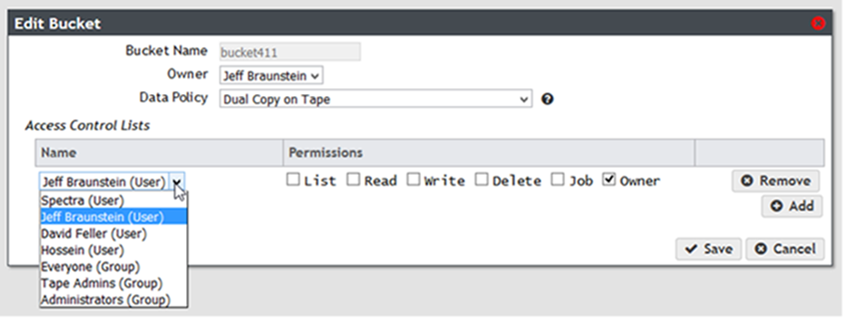

In BlackPearl, ACLs are primarily used to control permissions on objects in buckets, which are the top-level containers in BlackPearl. Bucket permissions can be granted to a user or a group.

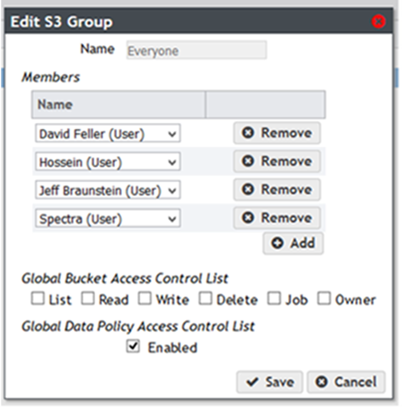

Groups are a new feature in BlackPearl 3.0, and can consist of users and other groups. Groups can be managed on the Users page in the web management interface. BlackPearl 3.0 ships with two default groups, “Everyone” and “Administrators”, and more groups can be created.
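Because groups can contain other groups, working out who actually belongs to a group means expanding the nesting. The snippet below is a minimal sketch of that idea using a plain in-memory dictionary; it is not BlackPearl code. The group names, user names, and the `effective_users` helper are all hypothetical and exist only for illustration.

```python
from typing import Dict, Set

# Hypothetical in-memory model (not BlackPearl's data structures): each group
# maps to its direct members, which may be users or the names of other groups.
GROUPS: Dict[str, Set[str]] = {
    "Project Leads": {"alice"},
    "Engineering": {"bob", "Project Leads"},      # a group nested in a group
    "All Staff": {"carol", "Engineering"},
}


def effective_users(group: str, groups: Dict[str, Set[str]]) -> Set[str]:
    """Return every user in `group`, directly or through nested groups."""
    users: Set[str] = set()
    seen: Set[str] = set()            # guards against cycles such as A -> B -> A
    stack = [group]
    while stack:
        current = stack.pop()
        if current in seen:
            continue
        seen.add(current)
        for member in groups.get(current, set()):
            if member in groups:      # member is itself a group: expand it later
                stack.append(member)
            else:                     # anything else is treated as a user
                users.add(member)
    return users
```

Calling `effective_users("All Staff", GROUPS)` collects users from all three groups, which is why a permission granted to an outer group would also reach the members of the groups nested inside it.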

The following permissions can be granted to a user or group on a bucket:

- List – List all objects in a bucket

- Read – Download (GET) objects from a bucket

- Write – Upload (PUT) objects to a bucket

- Delete – Delete objects from a bucket

- Job – Modify or cancel jobs associated with the bucket, even if the job wasn’t created by that user or group

- Owner – Full control of the bucket. Includes all permissions above. By default, the user who creates the bucket is given Owner permissions on that bucket.

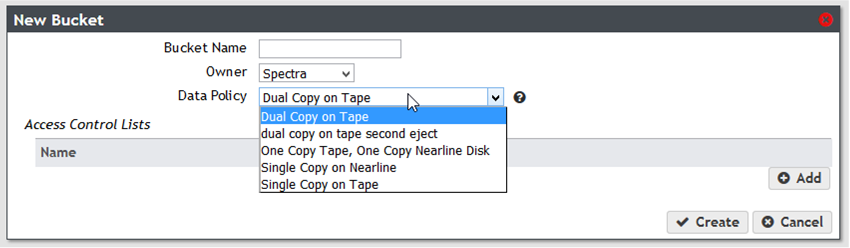

When a bucket is created or edited in the web management interface, ACLs can be set on the bucket as shown in the first image below. A typical use case might be that you want to give full access to a bucket to certain users and only read access to other users. In this case you could create a “Full Access” group and set an ACL giving that group “Owner” permissions on the bucket. You could then create another group called “Read Only Access” and set an ACL giving that group “List” and “Read” permissions.
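The use case above can be sketched in a few lines of code. This is an illustrative model only, not the BlackPearl API: the `Permission` enum, the `bucket_acl` dictionary, and the `is_allowed` helper are hypothetical names, and treating “Owner” as the union of the other five permissions is my assumption based on the description that it includes all of them.

```python
from enum import Flag, auto
from typing import Dict


class Permission(Flag):
    """The six bucket permissions from the list above, modeled as bit flags."""
    LIST = auto()
    READ = auto()
    WRITE = auto()
    DELETE = auto()
    JOB = auto()
    # Assumption: Owner is modeled as the union of the other five permissions.
    OWNER = LIST | READ | WRITE | DELETE | JOB


# Hypothetical ACL for a single bucket: group name -> granted permissions.
bucket_acl: Dict[str, Permission] = {
    "Full Access": Permission.OWNER,
    "Read Only Access": Permission.LIST | Permission.READ,
}


def is_allowed(acl: Dict[str, Permission], group: str, needed: Permission) -> bool:
    """True if `group` holds every permission in `needed` on this bucket."""
    granted = acl.get(group, Permission(0))   # unknown groups get no permissions
    return needed in granted
```

With this model, `is_allowed(bucket_acl, "Read Only Access", Permission.WRITE)` is false while the same check for “Full Access” is true, which mirrors the two groups described in the use case.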

Global Permissions

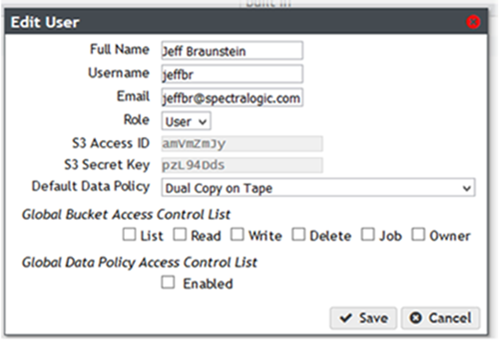

Users and groups can be granted “Global” ACLs on all buckets. So for example, a user or group could be given “Read” permission on all objects in all buckets in BlackPearl. These settings are controlled in the “Global Bucket Access Control List” in the User and Group settings as shown in the two images below.

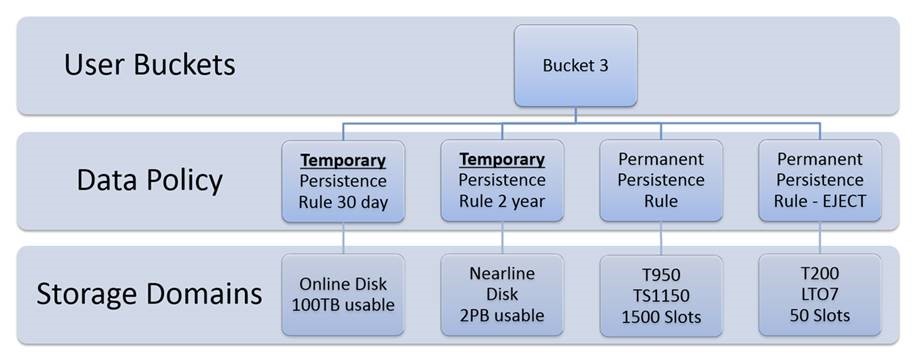

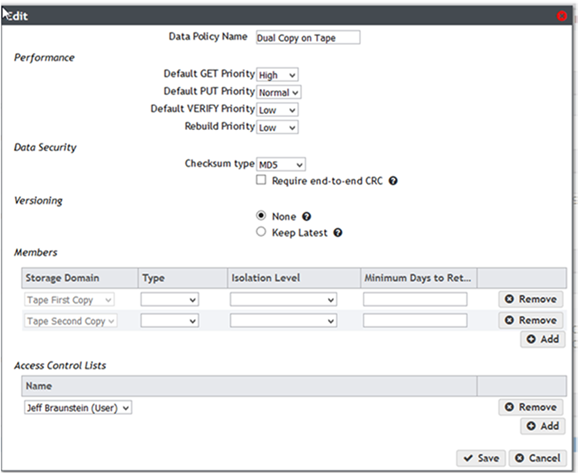
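One way to picture how a global ACL relates to a per-bucket ACL is as two sets of grants that are combined when access is checked. The sketch below assumes the effective permissions are simply the union of the two; that combination rule, the principal and bucket names, and the `effective_permissions` helper are my own illustrative assumptions, not documented BlackPearl behavior.

```python
from typing import Dict, Set

# Hypothetical data: permissions granted on every bucket, keyed by user or group.
global_acl: Dict[str, Set[str]] = {"auditors": {"List", "Read"}}

# Hypothetical data: per-bucket grants, keyed by bucket, then by user or group.
bucket_acls: Dict[str, Dict[str, Set[str]]] = {
    "dailies": {"editors": {"List", "Read", "Write"}},
}


def effective_permissions(principal: str, bucket: str) -> Set[str]:
    """Union of the principal's global grants and its grants on one bucket.

    Assumption: global and per-bucket grants are additive.
    """
    per_bucket = bucket_acls.get(bucket, {}).get(principal, set())
    return global_acl.get(principal, set()) | per_bucket
```

Here the hypothetical `auditors` group can list and read objects in any bucket, including ones that have no ACL entry for it, while `editors` only has access to the `dailies` bucket.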

ACLs and Data Policies

As mentioned in Part 1 of this blog post, data policies set on buckets control how many copies of each object are kept on each storage medium and for how long. In some cases, you may want only certain users to be able to use certain data policies when creating a bucket. BlackPearl lets you set ACLs on data policies to control this access. BlackPearl 3.0 includes a built-in “Everyone” group, and members of this group by default have global permissions to use all data policies as shown by the checked box in the image above. This means that by default all users can access all data policies. However, administrators can remove this setting and only let certain users access certain data policies, as shown below.
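To make the data policy rules described above concrete, here is a minimal sketch of the decision in code. It is a model for illustration, not the BlackPearl implementation: the `usable_policies` function, its parameters, and the policy and group names in the usage note are hypothetical, and it assumes every user is implicitly a member of “Everyone”.

```python
from typing import Dict, List, Set

EVERYONE = "Everyone"  # the built-in group named in this post


def usable_policies(
    user_groups: Set[str],
    policy_acls: Dict[str, Set[str]],
    global_policy_groups: Set[str],
) -> List[str]:
    """Return the data policies a user may choose when creating a bucket.

    user_groups: groups the user belongs to (hypothetical input).
    policy_acls: data policy name -> groups allowed to use that policy.
    global_policy_groups: groups allowed to use every data policy.
    """
    groups = user_groups | {EVERYONE}   # assumption: all users are in Everyone
    if groups & global_policy_groups:   # a global grant unlocks every policy
        return sorted(policy_acls)
    return sorted(name for name, allowed in policy_acls.items() if groups & allowed)
```

With `global_policy_groups={"Everyone"}`, which models the default setting, every user sees every policy. If an administrator removes that grant (modeled here as an empty set), a user in a hypothetical “Editors” group would see only the policies whose ACL lists “Editors”.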

ACLs in the API and SDKs

All the ACL settings that are available in the web management interface, as shown in the screens above, can also be controlled using the BlackPearl API and SDKs. So for example, not only can a bucket be programmatically created, but the ACLs on the bucket can also be programmatically set.

Coming Up Next

In my next blog post, I’ll talk about how developers will be able to use the new BlackPearl 3.0 features via the API and SDKs. I’ll also explain how developers who have already built clients, or are in the process of building them, can prepare to migrate to BlackPearl 3.0.